A very simple question for this short post: what length pause (trend < 0) in global mean surface temperature could be simulated in a warming climate?

Several studies have suggested that around 5% of 10-year periods could show a cooling trend in future climate projections. Some examples have even shown periods of 17 years length can show a temporary cooling.
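As a rough illustration of how such a percentage can be estimated, the sketch below computes the least-squares trend in every overlapping window of a series and reports the fraction that are negative. The synthetic series (a 0.02 K/yr linear trend plus white noise with a 0.1 K standard deviation) and the function names are assumptions made for this example, not output from any climate model.

```python
import numpy as np

def sliding_trends(series, window):
    """Least-squares slope (units per time step) of every overlapping window."""
    y = np.asarray(series, dtype=float)
    if window < 2 or window > y.size:
        raise ValueError("window must be between 2 and len(series)")
    # Centred time axis: with sum(t) == 0 the OLS slope is sum(t*y) / sum(t*t).
    t = np.arange(window) - (window - 1) / 2.0
    windows = np.lib.stride_tricks.sliding_window_view(y, window)
    return windows @ t / (t @ t)

def fraction_negative(series, window):
    """Fraction of overlapping windows whose trend is below zero."""
    return float(np.mean(sliding_trends(series, window) < 0))

# Hypothetical stand-in for a model run: steady warming plus white noise.
rng = np.random.default_rng(42)
years = np.arange(200)
synthetic = 0.02 * years + rng.normal(0.0, 0.1, years.size)
print(f"{fraction_negative(synthetic, 10):.1%} of 10-year windows have a negative trend")
```

Overlapping windows are not independent samples, so the printed fraction is a descriptive statistic rather than a formal probability.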

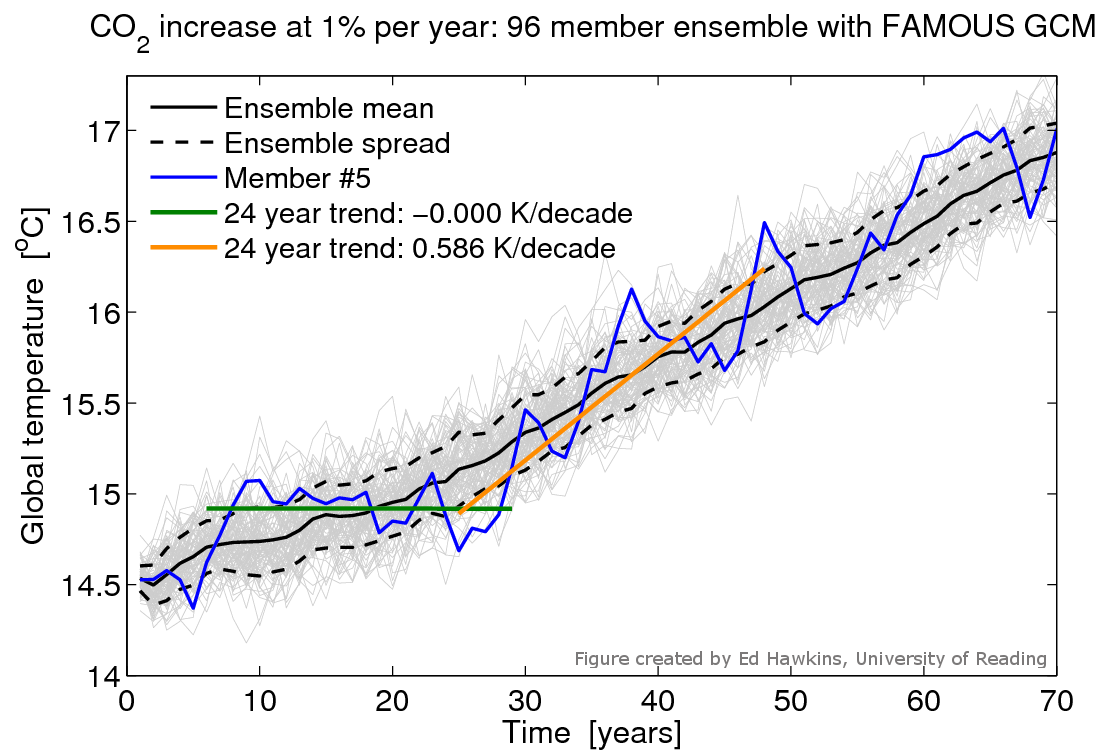

The figure shows a period of 24 years with a (just) negative trend in a simulation which increases CO2 at 1% per year, using the FAMOUS GCM. This simulation is one of 96 identical simulations which differ only in a tiny perturbation (‘butterfly effect’) in the initial conditions. Several periods with negative trends lasting longer than 20 years are seen in this ensemble.

The magnitude of interannual variability in FAMOUS is likely a bit larger than in observations, so a trend of this length should not necessarily be considered possible in the real climate system, but exploring the mechanisms behind the trend will certainly be of interest. Does the heat go into the deep ocean, or is there a cloud effect altering the top of atmosphere radiative balance?

More on these simulations to follow in a future post!

This model seems to have a warming in the global average temperature of over 0.7C in 3 years, from years 6-9. That’s more than the actual warming over the last 30 years, so I don’t think this can be regarded as a useful climate model, except for the purpose of ruling out some of the assumptions that went into it.

Hi Paul, yes, as I say in the post, the variability in this model looks too high, but understanding the possible mechanisms for the pause is definitely interesting.

Cheers,

Ed.

As I said on twitter, with a low enough climate sensitivity and a high enough variability you can get a pause of any length you like.

Yes, but this model also has quite a high TCR – about 2.4K.

Cheers,

Ed.

Well if you want to be rigorous, with a low enough climate sensitivity and/or a high enough variability you can get a pause as long as you like. Obviously for a low sensitivity you can get away with a low variability and for a high sensitivity you need a high variability.
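This trade-off can be made semi-quantitative under a strong simplifying assumption: if the year-to-year variability is Gaussian white noise with standard deviation `sigma` around a linear trend `beta`, the least-squares trend over `n` annual values has standard error `sigma * sqrt(12 / (n * (n**2 - 1)))`. The sketch below uses that to estimate the chance of a negative trend. The numbers in the loop are illustrative placeholders, and because real variability is positively autocorrelated, the white-noise assumption will tend to understate how often long pauses occur.

```python
import math

def trend_standard_error(sigma, n_years):
    """Standard error of an OLS trend fitted to n_years of white noise (sd = sigma)."""
    return sigma * math.sqrt(12.0 / (n_years * (n_years**2 - 1)))

def prob_negative_trend(beta, sigma, n_years):
    """P(fitted trend < 0) for true trend beta, assuming Gaussian white noise."""
    z = beta / trend_standard_error(sigma, n_years)
    # Standard normal CDF evaluated at -z.
    return 0.5 * (1.0 + math.erf(-z / math.sqrt(2.0)))

# Placeholder values: 0.02 K/yr underlying warming, 0.15 K interannual noise.
for n in (10, 17, 24):
    print(n, "years:", round(prob_negative_trend(0.02, 0.15, n), 4))
```

Only the ratio `beta / sigma` and the window length matter here, which is the point made above: a smaller trend or larger variability both make a pause of a given length more likely.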

A TCR of 2.4 is a lot lower than some of the silly numbers that were being bandied around a decade ago.

I think a reverse experiment might be interesting as well. If there are simulations that fix anthropogenic forcings at pre-industrial levels then how often (if ever) do we see warming periods that resemble the recent warming period (1970’s-1990’s)?

At present it appears that we have a theory with time periods that correlate well with it and other periods where there is greater uncertainty. The tests you outline seem only to try to ‘explain away’ the troublesome observations rather than to really test the theory.

Hi HR,

You can already see the control simulations here:

http://www.climate-lab-book.ac.uk/2013/variable-variability/

Cheers,

Ed.

Thanks Ed but I’m greedy. I was hoping for 96 control runs of FAMOUS to have side-by-side comparisons, wishful thinking.

Hi HR, we do have 1200 years worth of control run with FAMOUS so that’s quite similar.

Ed.

So I thought I’d have a go at an example of what I suggested above, i.e. the opposite experiment to what Ed is proposing here: a comparison of an unforced model control run with the late 20th century warming period. I have access to very little data, only the CMIP3&5 data available on KNMI Climate Explorer. There are no individual pre-industrial control (PICntrl) runs for CMIP5, and only two for CMIP3 (ECHAM5 and HADGEM1). ECHAM5 would be a sceptic’s dream: there are 500 years of highly variable data, and scanning it by eye there seem to be lots of warming periods that far exceed the late 20th century observed one. But it looks far too unrealistic to be useful. That leaves only 140 years of the HADGEM1 PICntrl. So remember this is a simulation NOT forced by anthro forcings and I’m comparing it to observed data. I picked a 22-year period (1975-1997) that seems to be well representative of the observed warm period and I’ll compare that to a 22-year period in the simulation.

Hadcrut4 (obs) (1.8°C/decade)

http://oi44.tinypic.com/vhw9ef.jpg

Hadgem1 (unforced simulation) (1.2°C/decade)

http://oi44.tinypic.com/2tc1j.jpg

So with only 140 years of unforced data you can find unforced warm periods whose trend is 66% of that of the late 20th century warm period.

I suspect Ed can probably find stronger warming in his 1200 years of control data if his model is similar to HADGEM1. So following the logic that I think Ed is pursuing in the experiment he’s proposing here, it seems perfectly fair that one possible conclusion could be that up to 66% of the recent warming could be natural. I think there are other ways of interpreting the data but I don’t think this is stretching things too far.

I just think there’s no harm, and lots to be learnt, in applying a test designed for data you are struggling to explain to the data you believe you can already explain well, just in case there are plausible alternative explanations.

Hi HR,

You can see the observations compared with many different control simulations in the Variable variability post. I suspect you can see periods of change similar to the late 20th century warming, but not so easily for the whole 160 years.

cheers,

Ed.

Sure Ed, I think most people would be surprised if an unforced simulation matched the 20th century. Just to be clear, I wasn’t trying to suggest that climate change can be solely explained by natural variability. It’s not forcings OR internal variability, there is a role for both. And that’s my problem: that’s clearly what you’re exploring in connection with the present pause, but go back to the warming phase and internal variability is dropped in favour of an explanation that almost solely comes from ghg forcing. You complain in your next post about the failure of science to properly communicate the role of internal variability. I think that comes directly from the certainty with which the late 20th century warm period is explained.

You seem here to have a tool to explore what’s going on in both periods.

Hi HR,

Agreed – there is a role for forcings and internal variability in the whole 20th century and beyond. About 2.5% of 25-year periods in the FAMOUS control simulation show a similar trend to 1974-1998, with the same caveats about too large inter-annual variability in this model.
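For readers who want to try this kind of count on a control run of their own, here is a minimal sketch of the calculation: fit a trend to every overlapping 25-year window of an unforced series and count how many reach a chosen threshold. The AR(1) "control run", its parameters, and the threshold `obs_trend` are all placeholders invented for the example. They are not FAMOUS output and not the observed 1974-1998 trend.

```python
import numpy as np

def window_trends(series, window):
    """OLS trend (units per time step) of each overlapping window, via np.polyfit."""
    y = np.asarray(series, dtype=float)
    t = np.arange(window)
    return np.array([np.polyfit(t, y[i:i + window], 1)[0]
                     for i in range(y.size - window + 1)])

def fraction_at_least(series, window, threshold):
    """Fraction of windows whose trend is greater than or equal to threshold."""
    return float(np.mean(window_trends(series, window) >= threshold))

def ar1_series(n, phi, sigma, seed=0):
    """Hypothetical unforced 'control run': AR(1) red noise with no trend."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, n)
    x = np.zeros(n)
    for i in range(1, n):
        x[i] = phi * x[i - 1] + eps[i]
    return x

control = ar1_series(1200, phi=0.6, sigma=0.1)   # placeholder parameters
obs_trend = 0.017                                # K/yr, placeholder threshold
print(f"{fraction_at_least(control, 25, obs_trend):.1%} of 25-year windows reach the threshold")
```

The result depends strongly on the assumed variability, which is the same caveat that applies to the FAMOUS figure.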

We are currently writing up the first results from these experiments. I think we would be happy to release the data after that paper is submitted.

cheers,

Ed.

BTW are the FAMOUS simulation data online?

A good way to see how (un)realistic the model is would be to plot it and the observations on the same graph. Lucia has done this in a (rejected) comment on the misleading Easterling and Wehner paper, showing that they chose a model with greatly inflated fluctuation, see her fig 1 at

http://rankexploits.com/musings/2013/easterling-and-wehner-mists-of-time/

Hi Paul,

Unfortunately we don’t have a 20th century simulation with FAMOUS to compare. But, if you examine the time-series of the control runs in the Variable variability post, then FAMOUS would be similar to the more variable models, and the observations are also shown there.

cheers,

Ed.

PS. I think it is a pity that GRL don’t accept comments – have also wanted to comment on a couple of papers recently, but not been able to!

Ed, I don’t know if you go back to old posts to answer questions, but this one seems the best place to ask this question.

It’s arisen because I came across another example of a pause in temperature in another model output.

http://www.scilogs.de/klimalounge/files/MRIscenario1.png

This is similar to the one you link for the ECHAM5/MPI-OM output in the ESSENCE experiment (the “17 years length” link in the post). Trying to dig deeper on these, I realised both of these ‘pauses’ suffer from a possible problem. In the model runs, forcing data is input as historical data and, when this runs out, as projected data. There is a kind of ‘pasting’ of two data sets to form the input data. I understand this is completely necessary, but it does cause a potential issue. I’m mainly thinking of aerosol data here, because these seem to vary most between data sets and have the potential to cause a sudden jump that shows up as a quick response in the temperature output (similar to the impact of a volcano). It’s difficult for me to investigate this fully, but the link I show (which is the MRI model) seems to show that effect. See this link

http://www.mri-jma.go.jp/Dep/cl/cl4/IPCC-AR4/simulations2.html

The documentation for the ECHAM model that I’ve found doesn’t give enough detail to be able to check this out. See http://www.knmi.nl/publications/fulltexts/essence_1_v2.2_copy1.pdf

It strikes me that these two examples are exactly the worst points in the model output to choose when looking for the effects of internal variability on short temperature trends, since they have the potential to be affected by inhomogeneities in the input data.

Hi HR,

Yes – I agree there is the potential for there to be a small forcing shock when transitioning from the historical ‘scenario’ to the future scenarios. But, the scenario developers do try and ensure the major forcings are smooth across the transition. There is still the possibility that the implementation of certain forcings in different models may be less smooth, but it is thought to be a small effect.

You can graph the forcings used in CMIP5 here:

http://tntcat.iiasa.ac.at:8787/RcpDb/dsd?Action=htmlpage&page=compare

cheers,

Ed.