A new analysis by Clara Deser and colleagues (accepted for Nature Climate Change) provides some fantastic visualisations of the crucial role of natural variability in how we will experience climate.

Essentially, Deser et al. perform 40 simulations with the same climate model and the same radiative forcings, changing only the initial state of the atmosphere. Any differences between the simulations are therefore due solely to chaotic and rather unpredictable variability (the butterfly effect). Even so, the trends in the simulations over the next 50 years can be remarkably different, with some showing little warming and others showing strong warming. One caveat is that they use only a single model, and other models might show different ranges of behaviour, but there is no indication that the variability in CCSM3 – the model used – is wildly wrong.

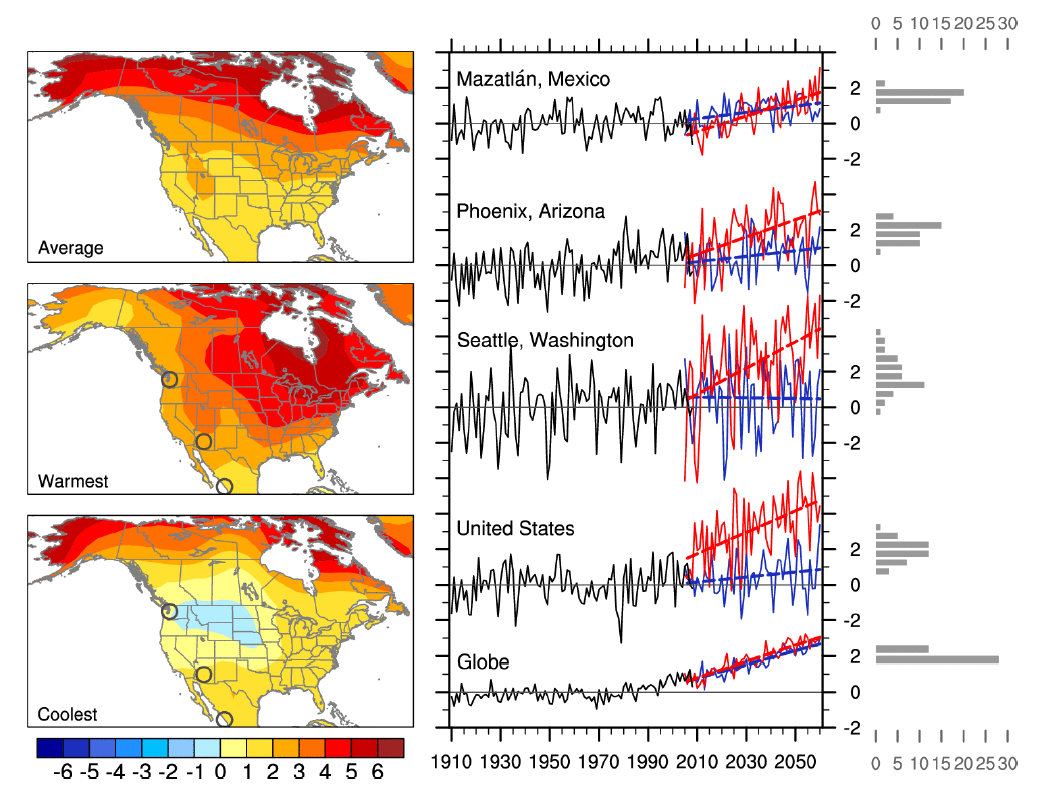

The examples they show are for North America (Fig. 1 below) – showing the average, warmest and coolest simulation for the U.S. with maps of the trend in winter (DJF) temperature. The timeseries show the maximum and minimum trends for the locations indicated, with the histograms showing the distribution of simulated trends. Globally, all the simulations warm by very similar amounts, but regionally the trends are extremely diverse. Versions of the figures for summer temperature and winter precipitation are also shown in the paper.
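Why the global mean is so much more tightly constrained than any single region can be illustrated with a deliberately crude toy ensemble. This is a minimal Python sketch under stated assumptions, not CCSM3 and not the authors' method. Each hypothetical 'region' is modelled as an independent red-noise (AR(1)) series added to one shared forced trend, and every parameter value (40 members, 50 years, 100 regions, 0.03 degrees per year) is illustrative. Real regions are spatially correlated, so this toy overstates how much averaging narrows the global spread.

```python
import random
import statistics


def ols_slope(values):
    """Ordinary least-squares slope of values against time index 0..n-1."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = statistics.fmean(values)
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def ar1_noise(n, phi, sigma, rng):
    """Red noise: x[t] = phi * x[t-1] + Gaussian white noise (an assumed model of variability)."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, sigma)
        out.append(x)
    return out


def toy_ensemble(members=40, years=50, regions=100, forced_trend=0.03,
                 phi=0.6, sigma=0.5, seed=1):
    """Return (single-region trends, global-mean trends), one value per member.

    All members share the same forced trend (identical 'forcing'). They differ
    only in their random noise, which stands in for different initial states.
    """
    rng = random.Random(seed)
    region_trends, global_trends = [], []
    for _ in range(members):
        fields = [
            [forced_trend * yr + e for yr, e in enumerate(ar1_noise(years, phi, sigma, rng))]
            for _ in range(regions)
        ]
        region_trends.append(ols_slope(fields[0]))  # one 'location of interest'
        global_mean = [statistics.fmean(f[yr] for f in fields) for yr in range(years)]
        global_trends.append(ols_slope(global_mean))
    return region_trends, global_trends


region, glob = toy_ensemble()
print(f"single-region trend spread (sd): {statistics.stdev(region):.4f} per year")
print(f"global-mean trend spread (sd):   {statistics.stdev(glob):.4f} per year")
```

Under these assumptions, the single-region trends should spread roughly √100 = 10 times more widely than the global-mean trends, even though every member has exactly the same forced trend.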

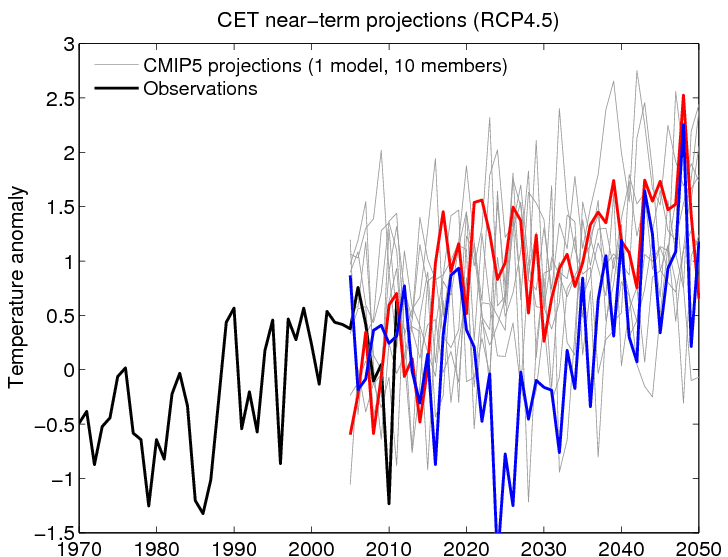

You could probably select any region of the globe and make similar plots, so I made a timeseries for annual temperature for the UK (Fig. 2 below). Here I have used just 10 simulations with a single climate model (CSIRO 3.6, different from the one used by Deser et al.), with identical radiative forcings, focusing on the UK via Central England Temperature (CET). Again, there is a wide range of behaviours across the simulations: one (in red) shows warming followed by a flatter period, whereas another (blue) shows a sharp cooling followed by a rapid warming. Each of these paths could be considered equally plausible, and together they demonstrate the uncertainty in future climate that is attributable to rather random fluctuations in the weather. They also highlight that the climate experienced in one location is not necessarily representative, as in all these simulations the globe as a whole warms by a similar amount.

Deser, C., Knutti, R., Solomon, S., & Phillips, A. (2012). Communication of the role of natural variability in future North American climate. Nature Climate Change, 2(12), 888. DOI: 10.1038/nclimate1779

So, climate models are chaotic – which means that they can’t be used to predict the future. Is this really news in the field that gave birth to chaos theory? On top of the chaos, if one imposes an artificial “forcing”, then on average you just get back some function of the forcing, particularly if you cherry-pick which runs to average.

Clearly, if you play around with initial conditions, you can get whatever output you desire.

I had assumed that “natural variability” was a euphemism for an external influence beyond our control or understanding, but it turns out to be much worse than that! The “variability” is entirely internal, totally unnatural and the cause is quite obvious. To describe the chaotic behavior of climate models as “natural variability” is more than a little disingenuous, don’t you think?

Hi Tom,

Yes, the models and the real climate system are chaotic, which means we have to provide ranges for the future rather than exact predictions. It’s not massive news, but it highlights how large the effect of variability might be, which isn’t quantified or appreciated often enough.

Not quite sure what you have against the term ‘natural variability’. It is entirely natural as it would happen without any external influence. It is beyond our control, but not our understanding as we can quantify its magnitude and causes.

Ed.

So, climate models _are_ chaotic. If you impose an external forcing, on average the models reflect that forcing over any particular time frame. No surprises there!

When you use the term “natural variability”, is what you really mean “sensitive dependence on initial conditions” of climate models?

You also state that, “uncertainty in future climate … is attributable to rather random fluctuations in the weather”. Really? Is it the models or the climate that is random?

Yes, climate models have always been chaotic! Modelled ‘natural variability’ normally refers to the variability generated by climate models with no changes in external forcing. But you also see this variability superimposed on any trend due to external forcings (e.g. each individual simulation above shows relatively hot and cold years around the trend). Changing the initial conditions produces different ‘realisations’ of the possible natural variability, as shown by the different lines in each of the panels above, and these can give quite different futures for regional climate, each consistent with the applied external forcings, even though the global mean shows very little difference. And yes, the real climate shows chaotic (or random) behaviour, as do the climate models.

Hi Ed,

If any very long control (stability) runs have been archived for a similar type of model, the stable part could be chopped into 55-year segments. These could also be split into two sets of odd and even alternates, so that the segments within each set are semi-independent at each segment’s year zero. One could then see whether they exhibit a similar spread or variance, or, perhaps better, a similar spread in the ratio of local/regional to global variance.

That would, I think, be different, in that the oceans would be semi-independent at each year zero, which might boost divergence. On the other hand, divergence might be suppressed, as these segments would lack the jolt from the atmosphere/ocean mismatch that the authors’ description suggests. I quote:

“slightly different initial conditions in the atmospheric model (taken from different days during December 1999 and January 2000 from the same 20th century CCSM3 run)”

There is a way of reading that phrase that would suggest that all but one of the starting conditions were highly unlikely.

I wonder just how big a jolt they are giving the model and whether they are overly exciting some resonant modes or patterns of persistence.
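The segment-splitting idea proposed at the start of this comment can be sketched in a few lines of Python. This is a minimal illustration, assuming the control run is available as plain lists of annual means for one location and for the global mean. The function names are hypothetical, the synthetic data in the usage lines are stand-ins rather than model output, and the 55-year segment length follows the comment. At least four complete segments are needed so that each of the odd and even sets has two.

```python
import random
import statistics


def ols_slope(values):
    """Ordinary least-squares slope of values against time index 0..n-1."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = statistics.fmean(values)
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def segment_trends(series, seg_len=55):
    """Trend of each consecutive, non-overlapping seg_len-year segment (a leftover tail is dropped)."""
    return [ols_slope(series[start:start + seg_len])
            for start in range(0, len(series) - seg_len + 1, seg_len)]


def local_to_global_spread(local, global_mean, seg_len=55):
    """Ratio of local to global trend spread, computed separately for even- and
    odd-numbered segments, so neighbouring segments in each set are separated
    by a gap of seg_len years."""
    lt = segment_trends(local, seg_len)
    gt = segment_trends(global_mean, seg_len)
    ratios = []
    for parity in (0, 1):
        ratios.append(statistics.stdev(lt[parity::2]) / statistics.stdev(gt[parity::2]))
    return tuple(ratios)


# Hypothetical usage with synthetic stand-in data (not real model output):
rng = random.Random(42)
global_series = [rng.gauss(0.0, 0.1) for _ in range(2000)]
local_series = [g + rng.gauss(0.0, 1.0) for g in global_series]
print(local_to_global_spread(local_series, global_series))
```

Comparing the even and odd ratios gives a crude check on how robust the estimate is to the choice of starting years.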

Alternatively, if such an archive could be found, regional/city spectra or periodograms could be obtained to check for a high degree of local/regional variance at the 55-year timescale, again perhaps better expressed relative to the global.

My thinking is simply that, were such data available, this would not cost any new model time.
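The periodogram check suggested above could look something like the following pure-Python sketch. It assumes an evenly spaced annual series with no missing years and uses a raw (unsmoothed, untapered) periodogram. The 55-year threshold simply echoes the comment, and the returned value is only a rough fraction of power; a real analysis would want a smoothed spectral estimate from a long control run. Applying it to a local series and to the global mean gives the comparison "relative to the global".

```python
import math


def periodogram(series):
    """Raw periodogram of an annual series.

    Returns (period_in_years, power) pairs for the Fourier frequencies k/n,
    k = 1..n//2, after removing the mean.
    """
    n = len(series)
    mean = sum(series) / n
    x = [v - mean for v in series]
    spectrum = []
    for k in range(1, n // 2 + 1):
        c = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
        s = sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
        spectrum.append((n / k, (c * c + s * s) / n))
    return spectrum


def low_frequency_fraction(series, min_period=55.0):
    """Rough fraction of periodogram power at periods of min_period years or longer."""
    spectrum = periodogram(series)
    total = sum(p for _, p in spectrum)
    low = sum(p for period, p in spectrum if period >= min_period)
    return low / total
```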

Alex

Hi Alex,

Thanks! A couple of related points there:

1) The ‘shock’ – this type of daily shift perturbation is done regularly. However, I prefer to add some tiny random noise to the SSTs to generate ensembles instead. There is a chance that a daily shift may produce a small shock, especially in regions where there is a large seasonal cycle, e.g. the Arctic. But, I don’t think this is a big deal here.

2) The authors have a related paper on the same ensemble – Deser et al. – which does a similar comparison to the one you suggest using the control simulations. There they actually use an atmosphere only simulation which uses climatological (but seasonally varying) SSTs and demonstrate that a similar spread in 55 year segments is found. This is surprising, and suggests that the atmosphere and land surface alone can produce this long timescale variability.

3) We are running a similar set of simulations with another model, and see similar features – more to come soon!

cheers,

Ed.

Thanks Ed,

To be honest, my first reaction to your comments relating to the second Clara Deser et al. paper was: “Oh no!”

An atmospheric model generating such long-term variance without oceanic cooperation was the option I left out of my first comment, for it raises the spectre of non-ergodicity.

Interestingly, Grant Branstator gets an acknowledgement in the paper. That isn’t surprising, as he somehow gets the computer time for very long non-warming runs, sometimes highly unrealistic ones (e.g. a world without seasons), which are experiments that rely on ergodicity, e.g. his Fluctuation Dissipation Theory work.

To me, there is chaos and there is chaos: two types. In one, the climate generates weather that has well-defined statistics; in the other, weather that lacks well-defined statistics, e.g. convergent means, variances, etc. I think that this relates to the Fermi-Pasta-Ulam problem/paradox, and to whether systems sufficiently more complex than the ones they considered are inherently ergodic.

Branstator’s results, if I understand him correctly, suggest that there is hope, and not only do the statistics converge but the effects of shocks are much more linear (in the sense of additive) than perhaps they have any right to be.

So what do we have here?

Much of the paper is directed not at my more general concerns but at the issue of signal to noise in a warming world. I find the noise much more interesting, and in a sense more objective, than the signal, the latter being our current subjective obsession. Hence I wish we publicly archived, and made much more use of, the boring millennia of unforced model runs.

That said, from the little I have gleaned so far, she seems to be saying that we know local/regional variance is patterned, i.e. has spatial modes, but is temporally stochastic (which she covers in a slideshow with voice-over archived from an old Kavli Symposium), and that this noise component of the temperature modes has a “surprising” amount of low-frequency variance as we go poleward.

Given that this applies to periods that are at least semi-centennial and perhaps longer, we would still be in some doubt as to where the baseline, the mean, could be said to reside in the high-latitude temperature record, even if the world were not warming.

What I mean here is that although the mean exists it might be hard to constrain without undisturbed temperature histories lasting for hundreds of years. Its estimate converges very slowly.
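The slow convergence can be quantified with the standard large-sample result for a first-order autoregressive (AR(1)) process, in which persistence shrinks the effective number of independent years to roughly n_eff ≈ n(1 − ρ)/(1 + ρ), where ρ is the lag-1 autocorrelation. The Python sketch below assumes that AR(1) model, which is only an approximation; variability with longer memory than AR(1) would make convergence slower still. The values of σ and ρ in the loop are illustrative, not taken from the paper.

```python
import math


def effective_sample_size(n_years, rho):
    """Approximate number of independent samples in an AR(1) series (large-n result)."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    return n_years * (1.0 - rho) / (1.0 + rho)


def standard_error_of_mean(sigma, n_years, rho):
    """Standard error of the sample mean of an AR(1) series with standard deviation sigma."""
    return sigma / math.sqrt(effective_sample_size(n_years, rho))


def years_needed(target_se, sigma, rho):
    """Record length needed for the standard error of the mean to fall to target_se."""
    return (sigma / target_se) ** 2 * (1.0 + rho) / (1.0 - rho)


for rho in (0.0, 0.5, 0.9):
    print(f"rho={rho}: {years_needed(0.1, 1.0, rho):.0f} years for a 0.1 degree standard error")
```

With σ = 1 degree, pinning the mean down to a 0.1 degree standard error needs about 100 years of uncorrelated data, but about 1900 years if ρ = 0.9.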

Hmm, I think that this might be a flavour of what you have been trying to tell us!

I am still puzzled, if not quite so troubled, that this has a purely atmospheric component. The spectra in the paper deal, I think, only with the dynamical pressure modes, which have a cut-off period of around one year; that seems too short to be relevant to the long-term temperature patterns. I should like to see the same for the noise component derived for the spatial modes of the temperature variance in non-warming model runs.

I think papers like these, yours and others, are important. It seems that Arctic temperatures can flap around like a sail in a breeze, and this is at least in part due to some long-term persistence in the atmospheric component.

If that is the case, highlighting Arctic changes as evidence for global changes could prove to be somewhat reckless. Is this not also something you have been trying to tell us?

I am pretty concerned about some developments in the attribution of weather events to AGW. There is a real risk of the relationships proving to be transitory. I understood, and was pretty convinced by, the theoretical relationship between temperatures and GHGs when there was very little to no evidence of an anthropogenic effect. In fact, I think that was before any of our current global temperature histories existed.

Alex

Thanks again Alex.

I agree entirely that we could learn a lot more from the control simulations. I have tried to collect a few over the years – the longest is 5940 years long with the HadCM3 model, and that is publicly archived at BADC (annual means only). The CMIP3 and CMIP5 control simulations are also available, and some are 1000 years long, but many are less than 500 years.

Deser et al are about to start on a new set of ensemble simulations for the future, but also starting in the past – these should give us a much better idea about whether the spread in these simulations is realistic or not. It is a surprise that the atmosphere and land can give centennial variability (not sure how the cryosphere may be allowed to vary in these simulations) – and many people don’t believe this. As for the Arctic – it does seem that the variability can be particularly large there in model simulations – but we lack the long timescale records to really tell. We must always be aware of this!

I am also concerned by the trend to attribute any ‘event’ – I think there is better evidence for some, e.g. EU 2003 and Russia 2010 heatwaves, which were extremely large. But, e.g. Hurricane Sandy is not a good case, as New York has had stronger storms hit in the past.

And, as an aside, it was Guy Callendar in 1938 who first measured the rise in global temperatures!

cheers,

Ed.

Hi Ed, and thanks again.

You wrote:

“It is a surprise that the atmosphere and land can give centennial variability (not sure how the cryosphere may be allowed to vary in these simulations) – and many people don’t believe this.”

The second Deser et al paper you mention above states an annual SST and sea ice cycle is repeated without change for the 10,000 year simulation:

“In addition to the 40-member CCSM3 ensemble, we make use of a 10,000-year control integration of CAM3, the atmospheric component of CCSM3, at T42 resolution under present-day GHG concentrations. In this integration, sea surface temperatures (SSTs) and sea ice are prescribed to vary with a repeating seasonal cycle but no year-to-year variability. The SST and sea ice conditions are based on observations during the period 1980–2000 from the data set of Hurrell et al. (2008). As in CCSM3, CAM3 is coupled to the Community Land Model (CLM; Oleson et al. 2004).”

It also indicates in Fig. 9 that the TS is very similar to that of the free-ocean simulations in terms of 56-year trend variance. I am not sure what is in the Community Land Model that could support long-term persistence. I doubt that thermal energy storage would suffice, as the characteristic time scale is likely to be months to years, not decades to centuries, unless the coupling of the surface is very weak (see below). Perhaps it grows forests, or has long-term patterns in soil moisture.
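This time-scale argument can be made concrete with the usual slab approximation. In it, a surface layer with heat capacity C per unit area relaxes back towards equilibrium with an e-folding time τ = C/λ, where λ (W m⁻² K⁻¹) measures how strongly the layer is coupled to the atmosphere. The Python sketch below uses illustrative values that are assumptions, not numbers from the paper: a volumetric heat capacity of about 2 × 10⁶ J m⁻³ K⁻¹ for moist soil, 4.1 × 10⁶ J m⁻³ K⁻¹ for seawater, and λ = 2 W m⁻² K⁻¹.

```python
SECONDS_PER_DAY = 86_400.0


def relaxation_time_days(depth_m, volumetric_heat_capacity, coupling):
    """e-folding time tau = C / lambda of a slab, in days.

    depth_m: thickness of the layer that stores heat (m)
    volumetric_heat_capacity: J m^-3 K^-1
    coupling: lambda, surface-atmosphere coupling strength (W m^-2 K^-1)
    """
    heat_capacity = volumetric_heat_capacity * depth_m  # C in J m^-2 K^-1
    return heat_capacity / coupling / SECONDS_PER_DAY


soil = relaxation_time_days(2.0, 2.0e6, 2.0)          # ~2 m of soil
mixed_layer = relaxation_time_days(50.0, 4.1e6, 2.0)  # 50 m ocean mixed layer
weak = relaxation_time_days(50.0, 4.1e6, 0.2)         # same layer, 10x weaker coupling
print(f"soil: {soil:.0f} days; mixed layer: {mixed_layer / 365:.1f} years; "
      f"weakly coupled: {weak / 365:.0f} years")
```

With these assumed numbers the soil relaxes in roughly three weeks and the mixed layer in roughly three years. Only with much weaker coupling does the time scale stretch to decades.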

I am not sure what many people think or why they should think such a thing should be unlikely.

Unless I am mistaken there is a flavour of this type of behaviour in some of Isaac Held’s posts. I think he ponders whether the coupling between the surface and the atmosphere weakens towards the poles.

That the ocean in your example here doesn’t seem to matter to the land temperatures towards the poles may be because it doesn’t: it is not sufficiently coupled.

I would have thought that a certain amount of waywardness in polar temperatures would be welcomed, even if problematic to explain. I thought there was a history of sustained and then reverting temperature shifts of the odd degree or more. A polar region inherently prone to such changes would need less in the way of positive feedbacks to create and destroy glaciations, and hence could do so more quickly. I have to confess my prejudice: invoking strong ice and/or CO2 feedbacks gives me a timing problem, as I associate (probably correctly) strong and much-lagging positive feedbacks with a great lengthening of time constants, which I deem to be incompatible with the suddenness of the deglaciations.

All that said, and much speculation it is, I must wonder whether the Arctic region can really be said to have an average or baseline temperature constrained more tightly than a couple of degrees, and whether that would really matter. If one can appeal to linearity in the sense of additivity, a one-degree forced change is still one degree warmer than without it, even if we don’t know what “without it” was ever going to be.

Alex

Thanks again Alex – I think you have identified the key issue – how strongly are the atmosphere and ocean coupled in reality, as a function of latitude, and do models represent this adequately? I know many who suspect that the atmospheric models do not respond strongly enough to SST variations, but without strong evidence to demonstrate this rigorously….

cheers,

Ed.

Hi Ed, and thanks again. Unless this interests you, I think I have got what I can from this thread.

Similar to the control runs, there is what I consider to be another type of unloved data: the climatological normals. For HadCRUT3 these were in a publicly available file, abstem3.dat, which I am struggling to find on the current download page.

Naturally, this contains the seasonal baseline, with just 12 monthly values for each grid square, and I think it is just as relevant to the coupling issue as the anomalies, perhaps more so.

The band of the Northern Westerlies, say the six rows straddling 45°N, has a strong annual signal, varying from a few degrees Celsius in amplitude over the most maritime squares to around 30 degrees (60 degrees peak-to-peak) over the most continental. Because that signal is large compared with the noise, at least over land, and the noise has been averaged over the 30-year baseline, the fundamental component (first harmonic) of the seasonal cycle can be extracted with some precision. How accurate it is would be a different matter.

That seasonal pattern can be extracted to form a phasor field: an amplitude and a phase angle relative to the solstice for each grid square.
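Extracting the first harmonic from the 12 monthly normals of one grid square is a small discrete Fourier fit. The following Python sketch is a hypothetical helper, not code from HadCRUT or CMIP. It assumes each monthly value sits at mid-month, that the year is 365 days long, and that the June solstice falls on day 172 (about 21 June). It returns the amplitude and the lag of the fitted annual peak after the solstice, both in degrees of arc and in days; since 360 degrees of phase corresponds to 365 days, one degree is about one day.

```python
import math

DAYS_PER_YEAR = 365.0
SOLSTICE_DAY = 172  # assumption: day of year of the June solstice (~21 June)


def first_harmonic(monthly_normals):
    """Fit T(t) ~ mean + A * cos(2*pi*t - phi) to 12 mid-month values.

    Returns (mean, amplitude, lag_degrees, lag_days), where the lag is the time
    of the fitted maximum after the solstice, wrapped into [-180, 180) degrees.
    """
    if len(monthly_normals) != 12:
        raise ValueError("expected 12 monthly values")
    n = 12
    mean = sum(monthly_normals) / n
    a = b = 0.0
    for month, temp in enumerate(monthly_normals):
        angle = 2 * math.pi * (month + 0.5) / n  # mid-month, as a fraction of a year
        a += temp * math.cos(angle)
        b += temp * math.sin(angle)
    a *= 2 / n
    b *= 2 / n
    amplitude = math.hypot(a, b)
    peak_deg = math.degrees(math.atan2(b, a))  # phase of the fitted maximum
    solstice_deg = 360.0 * SOLSTICE_DAY / DAYS_PER_YEAR
    lag_deg = (peak_deg - solstice_deg + 180.0) % 360.0 - 180.0
    return mean, amplitude, lag_deg, lag_deg * DAYS_PER_YEAR / 360.0
```

Running this for every 5-degree square of the normals would give the phasor field. A model climatology regridded to the same squares could be passed through the same function for comparison.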

It is, I think, reasonable to assume that one effect of the Westerlies is for the atmosphere to exchange phase and amplitude with the surface, causing an eastward transport of phase and amplitude. Judging from the phasor field, this seems to be the case. The most continental grid square, with the smallest phase angle and a large amplitude, is in East Asia around Mongolia. That is east of the centre of the landmass, as one would expect, since it is as far downstream as one can get before the effect of the Pacific is felt. I suspect that the large-scale eddies mix some Pacific phase in as far inland as the eddy size.

While I may be fooled concerning the accuracy of the data, the precision or resolution of phase seems good, perhaps to a couple of degrees of arc, which is roughly a couple of days; similarly, the amplitude may be resolvable to around a couple of degrees.

The pattern over the ocean is complicated; I expect phase and amplitude are being dragged around by the gyres.

Now, it is my suspicion, or prejudice, that a model that did not get the surface-atmosphere coupling right would struggle to reproduce the phasor field in the Westerlies band. I think it obvious that no coupling, or too little, would make the continents too evenly continental, failing to reproduce the pattern of diminishing phase with increasing amplitude that stretches from Europe through to East Asia.

Given that we are talking about models with prescribed oceans and thinking about coupling between the surface and the atmosphere, using normals as opposed to anomalies might be a more powerful test for coupling.

To be fair, CMIP did publish something on this, to the effect that the models were not a million miles off, but I think the resolution was more like one month. That is good for discriminating between maritime and continental, but I think there is more resolution available in the data than that.

I think it would not cost a lot to map a 30-year segment from a relevant model run, perhaps even a segment for the period 1961-1990, onto the Hadley 5-degree grid, extract the phasor field, and see whether it is compatible with an assumption of too little coupling.

Be warned, I have been hawking this idea around for some time and many have thought it wise to ignore it.

Alex

Thanks Alex – will think more about this suggestion! However, calculating the absolute temperatures is tricky – and I think they have stopped issuing them for HadCRUT4. From NOAA:

cheers,

Ed.

Hi Ed,

I was worried for a moment there. I think that failing to produce absolutes could be to shoot oneself in the foot, given the politics involved.

I have skimmed through the Colin P. Morice et al “Quantifying uncertainties in global and regional temperature change using an ensemble of observational estimates: the HadCRUT4 data set” paper.

Firstly, the station normals are still for the period 1961-1990, so the absolute normals recorded in abstem3.dat are still for the same baseline. The file is available from this page:

http://www.cru.uea.ac.uk/cru/data/temperature/

along with CRUTEM3, 3v, 4 and 4v, and HadSST2.

I do hope that this state of affairs is as I have stated and continues to be so. Dropping the absolutes would be a mistake for the following reasons.

One of the CMIP criticisms of the models was their considerable departure from the observed absolute temperatures. Without these absolutes, this would have become moot.

I personally have my doubts as to how well the models capture the seasonal pattern and the latitudinal contrast, and that concern would also become moot. I have stated that this could be of some interest with respect to coupling, and that line of investigation would be closed off.

I think I can understand the logic in going from gridded to station normals, insofar as it improves the anomalies. I also understand that the normals are a bit vague, but that vagueness is small relative to the total variance due to the tropical/polar contrast and the seasonal pattern over land.

I do not live in anomaly land, a world where it is almost the same temperature all year round and at all latitudes and longitudes. My conception of climate is essentially local everywhere, not global. Were I asked whether I could discriminate between the real world and a modelled world, the seasonal pattern and range, not the anomalies, would be a key deciding factor.

Lastly, given the nature of the debate, it would have seemed dubious to have rendered moot two key lines of argument as to whether the models produce an Earthly climate. If other teams fail to publish gridded absolutes that could be construed as being economical with the data and hence signify a lack of joined up thinking.

Thanks again,

Alex

Hi Ed,

Old post, but you have just mentioned it on Twitter. Having read the referenced paper and watched her 2013 AGU Chapman Conference talk, I think the obvious question is to what extent the heat is preserved within each run. The global average is much more narrowly constrained than regional or point temperatures; is this simply a matter of averaging, or is it because, within a run, when one area is warmer another is cooler? And is the “other region” actually on the surface or in the ocean?

It’d be interesting if you have any updates on this work,

Ed.

Hi Ed,

Good question. I think that there are different types of variability – i.e. sometimes the heat will simply be moved around and averaged out in the global mean, and sometimes there will be a net imbalance at the top of the atmosphere causing a more widespread change in temperature. Different GCMs probably have a diversity of characteristics in this regard. We are starting to think about this a bit more at the moment.
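The difference between heat that is simply "moved around" and a net change shows up directly in the variance of the global mean. For an equal-weight average of N regional anomalies with covariance matrix C, Var(mean) = (1/N²) Σᵢⱼ Cᵢⱼ. The Python sketch below assumes N equal-area regions (real global means use area weights) with a single correlation shared by every pair, which is purely illustrative. A correlation of −1/(N − 1) is the most negative such uniform value possible. Exact fractions are used so that the cancelling case comes out as exactly zero.

```python
from fractions import Fraction


def variance_of_mean(cov):
    """Variance of the equal-weight mean of N regions: (1/N^2) * sum of all cov[i][j]."""
    n = len(cov)
    return sum(sum(row) for row in cov) / n ** 2


def uniform_covariance(n, variance, correlation):
    """N x N covariance matrix with one correlation shared by every pair of regions."""
    return [[variance if i == j else correlation * variance for j in range(n)]
            for i in range(n)]


n = 10
cases = [("independent regions", Fraction(0)),
         ("pure redistribution (most anti-correlated)", Fraction(-1, n - 1)),
         ("coherent change (e.g. net TOA imbalance)", Fraction(1))]
for label, corr in cases:
    v = variance_of_mean(uniform_covariance(n, Fraction(1), corr))
    print(f"{label}: variance of global mean = {float(v):.3f}")
```

With unit regional variance, the global-mean variance is 1/N when regions are independent (plain averaging), zero when the anomalies exactly cancel (heat only redistributed), and the full regional variance when all regions move together.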

This guest post is also relevant:

http://www.climate-lab-book.ac.uk/2014/toa-and-unforced-variability/

cheers,

Ed.